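This article shows how to use TensorFlow to detect objects in images,

so that an Object Detection model can detect these four kinds of objects in an image. A total of 160 images were downloaded and labeled by hand (labelimg)

the objects in the images, and steps 3 to 8 below then complete object detection on the images,

none of the images were preprocessed.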

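1. Create the folders

First create the project folder {project}; any name will do.

Then create five folders inside {project}: config, data, images, model, testImages

config: holds the configuration files used for training

data: the CSV and TFRecord files for the training and test stages

images: the images used to build the model

model: stores the trained model

testImages: stores the images used at the end to test object detection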
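The folder layout above can be created in one step. The following is a minimal sketch: the helper name `create_project_layout` is hypothetical, and only the five folder names come from the list above.

```python
from pathlib import Path

# Folder names taken from the list above.
PROJECT_SUBDIRS = ("config", "data", "images", "model", "testImages")


def create_project_layout(project_root):
    """Create the project folder and its five sub-folders (safe to re-run)."""
    root = Path(project_root)
    created = []
    for name in PROJECT_SUBDIRS:
        folder = root / name
        # parents=True also creates the project root if it does not exist yet.
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created
```

Calling `create_project_layout("my_project")` (a placeholder name) returns the five paths it created or found.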

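2. Prepare the images

Search Google for images and download them into {project}/

wheelchair (injured person in a wheelchair): 80 images

crutch (injured person on crutches): 80 images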

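3. Label the objects

Label every image downloaded into images by hand; this step uses labelimg

labelimg: https://github.com/

4. XML to CSV

First create two folders inside the images folder,

then put the following script, xml_to_csv.py, in {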

import os

import glob

import pandas as pd

import xml.etree.ElementTree as ET

def xml_to_csv(path):

print('path:' + path)

xml_list = []

for xml_file in glob.glob(path + '/*.xml'):

print('xml_file:' + xml_file)

tree = ET.parse(xml_file)

root = tree.getroot()

for member in root.findall('object'):

value = (root.find('filename').text,

int(root.

int(root.

member[0]

int(

int(

int(

int(

)

xml_list.append(

column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']

xml_df = pd.DataFrame(xml_list, columns=column_name)

return xml_df

def main():

for directory in ['train', 'test']:

image_path = os.path.join(os.getcwd(), 'images/{}'.format(directory))

xml_df = xml_to_csv(image_path)

xml_df.to_csv('data/{}

print('Successfully converted xml to csv.')

main()

Run it with the following command:

python xml_to_csv.py

When you see output like the following,

xml_file:D:\microservices\AI\

...

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

Successfully converted xml to csv.

path:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

xml_file:D:\microservices\AI\

Successfully converted xml to csv.

the CSV files have been created in the {project}/data folder.
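The conversion can also be written with lookups by element name rather than by position. The sketch below assumes the annotations were saved by labelimg in Pascal VOC XML format; the function names and the sample annotation are made up for illustration, and only the standard library is used so it runs without pandas.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Same column order as the column_name list in xml_to_csv.py.
COLUMNS = ["filename", "width", "height", "class", "xmin", "ymin", "xmax", "ymax"]


def voc_xml_to_rows(xml_text):
    """Return one row per <object> element in a Pascal VOC annotation string."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    rows = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        rows.append([
            filename, width, height, obj.findtext("name"),
            int(box.findtext("xmin")), int(box.findtext("ymin")),
            int(box.findtext("xmax")), int(box.findtext("ymax")),
        ])
    return rows


def rows_to_csv(rows):
    """Serialize the rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(COLUMNS)
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up annotation in the shape labelimg writes for Pascal VOC.
SAMPLE_XML = """
<annotation>
  <filename>sample.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>wheelchair</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>300</xmax><ymax>400</ymax></bndbox>
  </object>
</annotation>
"""

print(rows_to_csv(voc_xml_to_rows(SAMPLE_XML)))
```

Looking elements up by name keeps the script working even if the order of child elements in the XML changes.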

5. CSV to TFRecord

Put the following script, generate_tfrecord.py, in {project}

"""

Usage:

# From tensorflow/models/

# Create train data:

python generate_tfrecord.py --csv_input=data/train_labels.

# Create test data:

python generate_tfrecord.py --csv_input=data/test_labels.

"""

from __future__ import division

from __future__ import print_function

from __future__ import absolute_import

import os

import io

import pandas as pd

import tensorflow as tf

from PIL import Image

from object_detection.utils import dataset_util

from collections import namedtuple, OrderedDict

flags = tf.app.flags

flags.DEFINE_string('csv_

flags.DEFINE_string('output_

flags.DEFINE_string('image_

FLAGS = flags.FLAGS

# TO-DO replace this with label map

def class_text_to_int(row_label):

if row_label == 'wheelchair':

return 1

elif row_label == 'crutch':

return 2

else:

return None

def split(df, group):

data = namedtuple('data', ['filename', 'object'])

gb = df.groupby(group)

return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]

def create_tf_example(group, path):

with tf.gfile.GFile(os.path.join(

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = Image.open(encoded_jpg_io)

width, height = image.size

filename = group.filename.encode('utf8')

image_format = b'jpg'

xmins = []

xmaxs = []

ymins = []

ymaxs = []

classes_text = []

classes = []

for index, row in group.object.iterrows():

xmins.append(row['

xmaxs.append(row['

ymins.append(row['

ymaxs.append(row['

classes_text.append(

classes.append(class_

tf_example = tf.train.Example(features=tf.

'image/height': dataset_util.int64_feature(

'image/width': dataset_util.int64_feature(

'image/filename': dataset_util.bytes_feature(

'image/source_id': dataset_util.bytes_feature(

'image/encoded': dataset_util.bytes_feature(

'image/format': dataset_util.bytes_feature(

'image/object/bbox/

'image/object/bbox/

'image/object/bbox/

'image/object/bbox/

'image/object/class/

'image/object/class/

}))

return tf_example

def main(_):

writer = tf.python_io.TFRecordWriter(

path = os.path.join(FLAGS.image_dir)

examples = pd.read_csv(FLAGS.csv_input)

grouped = split(examples, 'filename')

for group in grouped:

tf_example = create_tf_example(group, path)

writer.write(tf_

writer.close()

output_path = os.path.join(os.getcwd(), FLAGS.output_path)

print('Successfully created the TFRecords: {}'.format(output_path))

if __name__ == '__main__':

tf.app.run()

In the code above, the part highlighted in red,

# TO-DO replace this with label map
def class_text_to_int(row_label):
    if row_label == 'wheelchair':
        return 1
    elif row_label == 'crutch':
        return 2
    else:
        return None

Run it with the following command:

python generate_tfrecord.py --csv_input=data/train_labels.

After you see the success message, look in {project}/

Successfully created the TFRecords: /tf/dataset/people/data/train.
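The box coordinates in the CSV are in pixels, while TFRecords for the Object Detection API conventionally store them as fractions of the image width and height. The truncated `xmins.append(...)` lines in the script presumably perform that division, though this is an assumption because the lines are cut off. A minimal sketch with a hypothetical helper, which also rejects boxes that fall outside the image:

```python
def normalize_box(xmin, ymin, xmax, ymax, width, height):
    """Scale pixel coordinates to fractions of the image size."""
    if width <= 0 or height <= 0:
        raise ValueError("image width and height must be positive")
    if not (0 <= xmin < xmax <= width and 0 <= ymin < ymax <= height):
        raise ValueError("box lies outside the image or has no area")
    return (xmin / width, ymin / height, xmax / width, ymax / height)


# Made-up box on a 640x480 image.
print(normalize_box(0, 0, 320, 240, width=640, height=480))
```

Running a check like this over the CSV before generating the records can surface labeling mistakes early.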

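6. Set up the training configuration file

First download the pretrained model into {project}/

This example uses ssd_mobilenet_v1_coco

To use a different model, download the matching model and config file

The model can be downloaded from the link below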

https://github.com/tensorflow/

The config file can be downloaded from the link below

https://github.com/tensorflow/models/tree/master/research/object_detection/samples/configs

Next, in the {project}/data folder, create object-

Its contents are as follows; it describes the objects this model can recognize

item {
  id: 1
  name: 'wheelchair'
}

item {
  id: 2
  name: 'crutch'
}
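The TO-DO comment in generate_tfrecord.py hints that the hard-coded class mapping could be derived from this label map. The sketch below is one way to do that under simple assumptions: it uses a regular expression that only handles entries with `id` followed by `name`, as in the file above. The Object Detection API ships its own `label_map_util` module for real use; the regex here is a stand-in, not that module's behavior.

```python
import re

# Matches one `item { id: N name: '...' }` entry, fields in that order.
ITEM_PATTERN = re.compile(
    r"item\s*\{\s*id:\s*(\d+)\s*name:\s*['\"]([^'\"]+)['\"]\s*\}"
)


def parse_label_map(text):
    """Return {class name: id} for a simple label map."""
    return {name: int(item_id) for item_id, name in ITEM_PATTERN.findall(text)}


LABEL_MAP_TEXT = """
item {
  id: 1
  name: 'wheelchair'
}
item {
  id: 2
  name: 'crutch'
}
"""

label_to_id = parse_label_map(LABEL_MAP_TEXT)


def class_text_to_int(row_label):
    # Raises KeyError for a label missing from the map instead of returning None.
    return label_to_id[row_label]


print(label_to_id)
```

With this approach the class list lives in one place, so adding a class means editing only the label map.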

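After that, set the relevant parameters in the config below (this example uses ssd_

The parts to change are shown in red, including the number of classes the model recognizes (num_

the label file (PATH_TO_LABELS), and the number of training steps (num_steps)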

# SSD with Mobilenet v1, configured for Oxford-IIIT Pets Dataset.

# Users should configure the fine_tune_checkpoint field in the train config as

# well as the label_map_path and input_path fields in the train_input_reader and

# eval_input_reader. Search for "PATH_TO_BE_CONFIGURED" to find the fields that

# should be configured.

model {

ssd {

# Number of classes the model can recognize

num_classes: 2

box_coder {

faster_rcnn_box_coder {

y_scale: 10.0

x_scale: 10.0

height_scale: 5.0

width_scale: 5.0

}

}

matcher {

argmax_matcher {

matched_threshold: 0.5

unmatched_threshold: 0.5

ignore_thresholds: false

negatives_lower_than_

force_match_for_each_

}

}

similarity_calculator {

iou_similarity {

}

}

anchor_generator {

ssd_anchor_generator {

num_layers: 6

min_scale: 0.2

max_scale: 0.95

aspect_ratios: 1.0

aspect_ratios: 2.0

aspect_ratios: 0.5

aspect_ratios: 3.0

aspect_ratios: 0.3333

}

}

image_resizer {

fixed_shape_resizer {

height: 300

width: 300

}

}

box_predictor {

convolutional_box_

min_depth: 0

max_depth: 0

num_layers_before_

use_dropout: false

dropout_keep_

kernel_size: 1

box_code_size: 4

apply_sigmoid_to_

conv_hyperparams {

activation: RELU_6,

regularizer {

l2_regularizer {

weight: 0.00004

}

}

initializer {

truncated_normal_

stddev: 0.03

mean: 0.0

}

}

batch_norm {

train: true,

scale: true,

center: true,

decay: 0.9997,

epsilon: 0.001,

}

}

}

}

feature_extractor {

type: 'ssd_mobilenet_v1'

min_depth: 16

depth_multiplier: 1.0

conv_hyperparams {

activation: RELU_6,

regularizer {

l2_regularizer {

weight: 0.00004

}

}

initializer {

truncated_normal_

stddev: 0.03

mean: 0.0

}

}

batch_norm {

train: true,

scale: true,

center: true,

decay: 0.9997,

epsilon: 0.001,

}

}

}

loss {

classification_loss {

weighted_sigmoid {

}

}

localization_loss {

weighted_smooth_l1 {

}

}

hard_example_miner {

num_hard_examples: 3000

iou_threshold: 0.99

loss_type: CLASSIFICATION

max_negatives_per_

min_negatives_per_

}

classification_weight: 1.0

localization_weight: 1.0

}

normalize_loss_by_num_

post_processing {

batch_non_max_

score_threshold: 1e-8

iou_threshold: 0.6

max_detections_per_

max_total_detections: 100

}

score_converter: SIGMOID

}

}

}

# The value in red here keeps the training process from being killed when memory runs short

train_config: {

batch_size: 2

optimizer {

rms_prop_optimizer: {

learning_rate: {

exponential_decay_

initial_learning_

decay_steps: 800720

decay_factor: 0.95

}

}

momentum_optimizer_

decay: 0.9

epsilon: 1.0

}

}

fine_tune_checkpoint: "config/ssd_mobilenet_v1_coco_

from_detection_checkpoint: true

load_all_detection_

# Note: The below line limits the training process to 200K steps, which we

# empirically found to be sufficient enough to train the pets dataset. This

# effectively bypasses the learning rate schedule (the learning rate will

# never decay). Remove the below line to train indefinitely.

# Set the number of training steps

num_steps: 10000

data_augmentation_options {

random_horizontal_flip {

}

}

data_augmentation_options {

ssd_random_crop {

}

}

batch_queue_capacity: 60

num_batch_queue_threads: 30

prefetch_queue_capacity: 40

}

# The file paths below need to be changed

train_input_reader: {

tf_record_input_reader {

input_path: "data/train.record"

}

label_map_path: "data/object-detection.pbtxt"

queue_capacity: 2

min_after_dequeue: 1

num_readers: 1

}

eval_config: {

metrics_set: "coco_detection_metrics"

num_examples: 1100

}

# The file paths below need to be changed

eval_input_reader: {

tf_record_input_reader {

input_path: "data/test.record"

}

label_map_path: "data/object-detection.pbtxt"

shuffle: false

num_readers: 1

}
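To get a feel for what `num_steps: 10000` with `batch_size: 2` means for a dataset this small, the number of passes over the training set can be estimated. In the sketch below, `num_steps` and `batch_size` come from the config above, while the figure of 128 training images is an assumption (80% of the 160 labeled images), not a number from the article.

```python
def approx_epochs(num_steps, batch_size, num_train_images):
    """Number of passes over the training set implied by the config values."""
    if num_train_images <= 0:
        raise ValueError("num_train_images must be positive")
    return num_steps * batch_size / num_train_images


# 128 training images is an assumed split, used only for illustration.
print(approx_epochs(num_steps=10000, batch_size=2, num_train_images=128))
```

Under that assumption the model sees each training image roughly 156 times, which is worth keeping in mind when watching for overfitting in TensorBoard.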

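Download the models repository; the next steps use some of the code in object_detection,

git clone https://github.com/

Use the following command to run, from the models repository just downloaded, object_

python /tf/models/research/object_

A successful run looks like this

Completion screen

Inspect the training results with TensorBoard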

tensorboard --logdir="/tf/dataset/people/

7. Export the model

After training finishes, in the {project} directory,

python /tf/models/research/object_

--input_type image_tensor \

--pipeline_config_path ssd_mobilenet_v1_pets.config \

--trained_checkpoint_prefix training/model.ckpt-10000 \

--output_directory model
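The value passed to `--trained_checkpoint_prefix` has to match a checkpoint that actually exists; if training stopped before step 10000, `model.ckpt-10000` will not be there. The sketch below picks the highest step from a list of file names, assuming the TensorFlow 1.x naming `model.ckpt-<step>.index`. The helper name and the sample listing are hypothetical.

```python
import re

# A checkpoint is identified here by its .index file.
CKPT_PATTERN = re.compile(r"^(model\.ckpt-(\d+))\.index$")


def latest_checkpoint_prefix(file_names):
    """Return the checkpoint prefix with the highest step, or None."""
    best_step, best_prefix = -1, None
    for name in file_names:
        match = CKPT_PATTERN.match(name)
        if match and int(match.group(2)) > best_step:
            best_step, best_prefix = int(match.group(2)), match.group(1)
    return best_prefix


# Hypothetical listing; real names would come from os.listdir("training").
names = [
    "checkpoint",
    "model.ckpt-8731.index",
    "model.ckpt-8731.meta",
    "model.ckpt-10000.index",
    "model.ckpt-10000.meta",
    "model.ckpt-10000.data-00000-of-00001",
]
print(latest_checkpoint_prefix(names))
```

The steps are compared as integers, so `10000` correctly ranks above `8731` even though it sorts lower as a string.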

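8. Object detection

With the steps above done, the next task is to detect the content we want in images

First copy the original object_detection/

The modified file is as follows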

{

"cells": [

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "V8-yl-s-WKMG"

},

"source": [

"# Object Detection Demo\n",

"Welcome to the object detection inference walkthrough! This notebook will walk you step by step through the process of using a pre-trained model to detect objects in an image. Make sure to follow the [installation instructions](https://github.

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "kFSqkTCdWKMI"

},

"source": [

"# Imports"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "hV4P5gyTWKMI"

},

"outputs": [],

"source": [

"import numpy as np\n",

"import os\n",

"import six.moves.urllib as urllib\n",

"import sys\n",

"import tarfile\n",

"import tensorflow as tf\n",

"import zipfile\n",

"\n",

"from distutils.version import StrictVersion\n",

"from collections import defaultdict\n",

"from io import StringIO\n",

"from matplotlib import pyplot as plt\n",

"from PIL import Image\n",

"import pylab\n",

"\n",

"# This is needed since the notebook is stored in the object_detection folder.\n",

"sys.path.append(\"..\")\

"from object_detection.utils import ops as utils_ops\n",

"\n",

"if StrictVersion(tf.__version__) < StrictVersion('1.9.0'):\n",

" raise ImportError('Please upgrade your TensorFlow installation to v1.9.* or later!')\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "Wy72mWwAWKMK"

},

"source": [

"## Env setup"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "v7m_NY_aWKMK"

},

"outputs": [],

"source": [

"# This is needed to display the images.\n",

"%matplotlib inline"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "r5FNuiRPWKMN"

},

"source": [

"## Object detection imports\n",

"Here are the imports from the object detection module."

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "bm0_uNRnWKMN"

},

"outputs": [],

"source": [

"from utils import label_map_util\n",

"\n",

"from utils import visualization_utils as vis_util"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "cfn_tRFOWKMO"

},

"source": [

"# Model preparation "

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "X_sEBLpVWKMQ"

},

"source": [

"## Variables\n",

"\n",

"Any model exported using the `export_inference_graph.py` tool can be loaded here simply by changing `PATH_TO_FROZEN_GRAPH` to point to a new .pb file. \n",

"\n",

"By default we use an \"SSD with Mobilenet\" model here. See the [detection model zoo](https://github.com/

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "VyPz_t8WWKMQ"

},

"outputs": [],

"source": [

"# What model to download.\n",

"MODEL_NAME = '/tf/dataset/people/model'\n",

"\n",

"# Path to frozen detection graph. This is the actual model that is used for the object detection.\n",

"PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'\

"\n",

"# List of the strings that is used to add correct label for each box.\n",

"PATH_TO_LABELS = os.path.join('/tf/dataset/

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "7ai8pLZZWKMS"

},

"source": [

"## Download Model"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "KILYnwR5WKMS"

},

"outputs": [],

"source": [

"#opener = urllib.request.URLopener()\n",

"#opener.retrieve(

"#tar_file = tarfile.open(MODEL_FILE)\n",

"#for file in tar_file.getmembers():\n",

"# file_name = os.path.basename(file.name)\n"

"# if 'frozen_inference_graph.pb' in file_name:\n",

"# tar_file.extract(

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "YBcB9QHLWKMU"

},

"source": [

"## Load a (frozen) Tensorflow model into memory."

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "KezjCRVvWKMV"

},

"outputs": [],

"source": [

"detection_graph = tf.Graph()\n",

"with detection_graph.as_default():\

" od_graph_def = tf.GraphDef()\n",

" with tf.gfile.GFile(PATH_TO_FROZEN_

" serialized_graph = fid.read()\n",

" od_graph_def.

" tf.import_graph_def(

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "_1MVVTcLWKMW"

},

"source": [

"## Loading label map\n",

"Label maps map indices to category names, so that when our convolution network predicts `5`, we know that this corresponds to `airplane`. Here we use internal utility functions, but anything that returns a dictionary mapping integers to appropriate string labels would be fine"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "hDbpHkiWWKMX"

},

"outputs": [],

"source": [

"category_index = label_map_util.create_

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "EFsoUHvbWKMZ"

},

"source": [

"## Helper code"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "aSlYc3JkWKMa"

},

"outputs": [],

"source": [

"def load_image_into_numpy_array(

" (im_width, im_height) = image.size\n",

" return np.array(image.getdata()).

" (im_height, im_width, 3)).astype(np.uint8)"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "H0_1AGhrWKMc"

},

"source": [

"# Detection"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "jG-zn5ykWKMd"

},

"outputs": [],

"source": [

"# For the sake of simplicity we will use only 2 images:\n",

"# image1.jpg\n",

"# image2.jpg\n",

"# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.\n",

"PATH_TO_TEST_IMAGES_DIR = '/tf/dataset/people/

"TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_

"\n",

"# Size, in inches, of the output images.\n",

"IMAGE_SIZE = (12, 8)"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "92BHxzcNWKMf"

},

"outputs": [],

"source": [

"def run_inference_for_single_

" with graph.as_default():\n",

" with tf.Session() as sess:\n",

" # Get handles to input and output tensors\n",

" ops = tf.get_default_graph().get_

" all_tensor_names = {output.name for op in ops for output in op.outputs}\n",

" tensor_dict = {}\n",

" for key in [\n",

" 'num_

" 'detection_

" ]:\n",

" tensor_name = key + ':0'\n",

" if tensor_name in all_tensor_names:\n",

" tensor_dict[

" tensor_

" if 'detection_masks' in tensor_dict:\n",

" # The following processing is only for single image\n",

" detection_boxes = tf.squeeze(tensor_dict['

" detection_masks = tf.squeeze(tensor_dict['

" # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.\n",

" real_num_

" detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])\n",

" detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])\n",

" detection_masks_

" detection_

" detection_masks_

" tf.greater(

" # Follow the convention by adding back the batch dimension\n",

" tensor_dict['

" detection_

" image_tensor = tf.get_default_graph().get_

"\n",

" # Run inference\n",

" output_dict = sess.run(tensor_dict,\n",

"

"\n",

" # all outputs are float32 numpy arrays, so convert types as appropriate\n",

" output_dict['num_

" output_dict['

" 'detection_

" output_dict['

" output_dict['

" if 'detection_masks' in output_dict:\n",

" output_dict['

" return output_dict"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "3a5wMHN8WKMh"

},

"outputs": [],

"source": [

"for image_path in TEST_IMAGE_PATHS:\n",

" image = Image.open(image_path)\n",

" # the array based representation of the image will be used later in order to prepare the\n",

" # result image with boxes and labels on it.\n",

" image_np = load_image_into_numpy_array(

" # Expand dimensions since the model expects images to have shape: [1, None, None, 3]\n",

" image_np_expanded = np.expand_dims(image_np, axis=0)\n",

" # Actual detection.\n",

" output_dict = run_inference_for_single_

" # Visualization of the results of a detection.\n",

" vis_util.visualize_

" image_np,\n",

" output_dict['

" output_dict['

" output_dict['

" category_index,\n",

" instance_masks=

" use_normalized_

" line_thickness=8)\

" plt.figure(figsize=

" plt.imshow(image_np)\n"

" pylab.show()"

]

},

{

"cell_type": "code",

"execution_count": 0,

"metadata": {

"colab": {

"autoexec": {

"startup": false,

"wait_interval": 0

}

},

"colab_type": "code",

"id": "LQSEnEsPWKMj"

},

"outputs": [],

"source": []

}

],

"metadata": {

"colab": {

"default_view": {},

"name": "object_detection_tutorial.

"provenance": [],

"version": "0.3.2",

"views": {}

},

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.5.2"

}

},

"nbformat": 4,

"nbformat_minor": 1

}

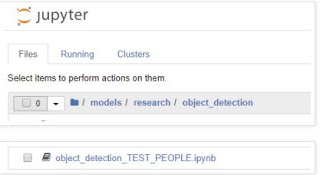

Put object_detection_TEST_PEOPLE.

cp object_detection_TEST_

Afterwards you can also edit the file above in Jupyter

Open a browser and go to /tf/models/research/

Run every cell through to the end and check the results

9. How do you get the detection results?

Modify models/research/object_detection/utils/visualization_utils.py

First declare a variable (used to collect the results)

categories_detected = []

as shown in the figure below

In the visualize_boxes_and_labels_on_image_array() function, make the changes below; the values highlighted in red are:

Detected class: str(class_name)

Score as an integer percentage: int(100*scores[i])

Score: 100*scores[i]

  global categories_detected
  # Added: start with an empty result list on every call.
  categories_detected = []
  # Create a display string (and color) for every box location, group any boxes
  # that correspond to the same location.
  box_to_display_str_map = collections.defaultdict(list)
  box_to_color_map = collections.defaultdict(str)
  box_to_instance_masks_map = {}
  box_to_instance_boundaries_map = {}
  box_to_keypoints_map = collections.defaultdict(list)
  if not max_boxes_to_draw:
    max_boxes_to_draw = boxes.shape[0]
  for i in range(min(max_boxes_to_draw, boxes.shape[0])):
    if scores is None or scores[i] > min_score_thresh:
      box = tuple(boxes[i].tolist())
      if instance_masks is not None:
        box_to_instance_masks_map[box] = instance_masks[i]
      if instance_boundaries is not None:
        box_to_instance_boundaries_map[box] = instance_boundaries[i]
      if keypoints is not None:
        box_to_keypoints_map[box].extend(keypoints[i])
      if scores is None:
        box_to_color_map[box] = groundtruth_box_visualization_color
      else:
        display_str = ''
        if not skip_labels:
          if not agnostic_mode:
            if classes[i] in category_index.keys():
              class_name = category_index[classes[i]]['name']
            else:
              class_name = 'N/A'
            display_str = str(class_name)
        if not skip_scores:
          if not display_str:
            display_str = '{}%'.format(int(100*scores[i]))
          else:
            display_str = '{}:{}%'.format(display_str, int(100*scores[i]))
        box_to_display_str_map[box].append(display_str)
        # Added: record class name, integer percentage and raw score for this box.
        # class_name is only set when skip_labels and agnostic_mode are both False.
        display_str = '{},{}%,{}'.format(str(class_name), int(100*scores[i]), 100*scores[i])
        categories_detected.append(display_str)
        if agnostic_mode:
          box_to_color_map[box] = 'DarkOrange'
        else:
          box_to_color_map[box] = STANDARD_COLORS[
              classes[i] % len(STANDARD_COLORS)]
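The same information can also be read straight from the inference output, without editing the library file. The sketch below assumes `output_dict` and `category_index` have the shapes used in the notebook (parallel sequences of class ids and scores, and a dict keyed by class id whose values carry a `name`). The helper name and the example values are made up.

```python
def collect_detections(output_dict, category_index, min_score_thresh=0.5):
    """Return (class name, score) pairs for detections above the threshold."""
    results = []
    classes = output_dict["detection_classes"]
    scores = output_dict["detection_scores"]
    for class_id, score in zip(classes, scores):
        if score > min_score_thresh:
            # Fall back to 'N/A' for ids missing from the label map.
            entry = category_index.get(int(class_id), {"name": "N/A"})
            results.append((entry["name"], float(score)))
    return results


# Made-up values shaped like the notebook's output_dict and category_index.
example_output = {
    "detection_classes": [1, 2, 1],
    "detection_scores": [0.91, 0.62, 0.30],
}
example_index = {
    1: {"id": 1, "name": "wheelchair"},
    2: {"id": 2, "name": "crutch"},
}
print(collect_detections(example_output, example_index, min_score_thresh=0.57))
```

Keeping this logic in the notebook means the installed Object Detection code stays unmodified and survives a fresh `git clone`.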

Then go back to object_detection_TEST_

import time

# This is needed to display the images.
%matplotlib inline

for image_path in TEST_IMAGE_PATHS:
  tStart = time.time()  # start timing
  image = Image.open(image_path)
  # the array based representation of the image will be used later in order to prepare the
  # result image with boxes and labels on it.
  image_np = load_image_into_numpy_array(image)
  # Expand dimensions since the model expects images to have shape: [1, None, None, 3]
  image_np_expanded = np.expand_dims(image_np, axis=0)
  # Actual detection.
  output_dict = run_inference_for_single_image(image_np, detection_graph)
  # Visualization of the results of a detection.
  vis_util.visualize_boxes_and_labels_on_image_array(
      image_np,
      output_dict['detection_boxes'],
      output_dict['detection_classes'],
      output_dict['detection_scores'],
      category_index,
      instance_masks=output_dict.get('detection_masks'),
      use_normalized_coordinates=True,
      min_score_thresh=.57,
      line_thickness=2)
  tEnd = time.time()  # stop timing
  # Print the elapsed time once, already formatted.
  print('It cost %.4f sec' % (tEnd - tStart))
  plt.figure(figsize=IMAGE_SIZE)
  print(image_path)
  # Filled in by the modified visualization_utils.py.
  print(vis_util.categories_detected)
  plt.imshow(image_np)
  pylab.show()

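I have a question.

Converting the CSV to a record file fails for me.

It says the utf-8 codec can't decode byte 0xa8 at position 67.

Did I do something wrong somewhere?

The file is probably not UTF-8 encoded, hence the error 'utf-8' codec can't decode byte 0xa8

Make sure Unicode is used both before and after the transfer. Reference:

Python 3 decoding problem: 'utf-8' codec can't decode byte 0xa8 in position xx: invalid start byte

https://www.twblogs.net/a/5c869c8abd9eee35fc1433db

Use encoding='utf-8' when reading the file. Reference:

UnicodeDecodeError: 'utf8' codec can't decode byte 0x9c

https://stackoverflow.com/questions/12468179/unicodedecodeerror-utf8-codec-cant-decode-byte-0x9c

I tried opening the CSV file in Excel and saving it again as Unicode (UTF-8),

but that did not solve the problem either.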
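For the decoding error discussed in the comments, one way to narrow it down is to read the file as raw bytes and try a short list of candidate encodings. The sketch below is only a diagnostic aid: `cp950` is a guess for files written on a Traditional Chinese Windows system, and nothing in the thread confirms which encoding the file actually uses.

```python
def decode_with_fallback(raw_bytes, encodings=("utf-8", "cp950")):
    """Try each encoding in turn; return (text, encoding that worked)."""
    for encoding in encodings:
        try:
            return raw_bytes.decode(encoding), encoding
        except UnicodeDecodeError:
            continue
    raise ValueError("none of the candidate encodings could decode the data")


# Simulate a CSV row saved in a legacy Traditional Chinese encoding.
legacy_bytes = "輪椅.jpg,640,480,wheelchair".encode("cp950")
text, used = decode_with_fallback(legacy_bytes)
print(used, text)
```

Once the working encoding is known, the file can be re-saved as UTF-8, or non-ASCII file names can be renamed so the problem does not recur further down the pipeline.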